If the degree of the polynomial curve is higher than needed for an exact fit, the result is undesirable for all the reasons that apply to high-order polynomials in general, and it also leads to a case where there are an infinite number of solutions. A first-degree polynomial, y = ax + b, is a line with slope a. Each constraint can be a point, an angle, or a curvature (which is the reciprocal of the radius of an osculating circle).

As the name suggests, linear regression assumes that outcome and predictor variables have a linear relationship, which isn't the case in all data sets. In R, both poly() and I() take x and convert it into a new x, so either can be used to state that we want to add a polynomial term to our predictor and the power in the term itself. Alternatively, evaluate raw polynomials. Obviously, y is the same. Our model should be something like this: y = a*q + b*q^2 + c*q^3 + cost. Let's fit it using R.

An R² value of 0.8234, for example, means that the fit explains 82.34% of the total variation in the data about the average. The Wikipedia article on R² suggests that it may be used for general model fitting rather than just linear regression. In Python, statsmodels can calculate the R² of a polynomial fit directly, and the fitted model summary can be printed or displayed as a rich HTML table in a Jupyter/IPython notebook. In base R, a confidence interval can be added to a polynomial curve drawn on top of a scatterplot by using the polygon() function.

As a MATLAB example, generate 10 points equally spaced along a sine curve in the interval [0,4*pi] with x = linspace(0,4*pi,10); y = sin(x); and then use polyfit to fit a 7th-degree polynomial to the points.

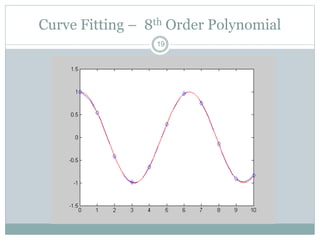

Complex values are not allowed. One option is the lmBF function, the Bayesian equivalent of the lm function. A straight-line fit is OK, but given the shape of the data, it makes sense to try adding a polynomial term to the model. Both the manual coding (Example 1) and the application of the poly function with raw = TRUE (Example 2) use raw polynomials.

Polynomial curve fitting is an example of regression, a supervised machine learning algorithm. By default, poly() produces orthogonal (i.e. uncorrelated) polynomials. The point is, when you're calculating (predicting) y based on a particular set of x values, you need to use the converted x values produced by either poly() or I() (depending on which one was in your linear model).

The p-value (shown under Pr(>F) in the output) is very small and well below 0.05, the typical threshold for statistical significance. It's clear from a quick visual inspection that the polynomial model gives a closer fit to the curved data.

I'm using Python and Numpy to calculate a best fit polynomial of arbitrary degree. However, my function does not work for polynomials with degree greater than 1. It's my understanding that the coefficient of determination is the square of the coefficient of correlation. For what it's worth, I've come to find that for linear regression, it is indeed the fastest and most direct method of calculating r. In timeit comparisons of a bunch of methods for 1000 random (x, y) points, the corrcoef method narrowly beats calculating the r^2 "manually" using numpy methods. Excel offers the same kind of fit as part of its graphing functions.

We can start by fitting a simple linear regression model to our example data. This outputs a Bayes factor for the regression, which is a measure of the evidence for our regression model versus a model with no coefficients. Ours in this case is much greater, meaning the model is 2.05 × 10^31 times more likely than one with no predictors. This means we can leave out the cubic component and choose model2 as our final model.

I copy-pasted from a Jupyter Notebook (hard not to call it an IPython Notebook), so I apologize if anything broke on the way. What are you giving Excel? Using this, I know I am calculating r-squared correctly for linear best-fit (degree equals 1). Even people as depressingly bad at programming as I am go through this sequence all the time.

Here is some R code which replicates the problem identified in this question, more or less. The first lm returns the expected answer. Since lm is the same in the two calls, it has to be the arguments of lm which are different.

I need to fit a curve to follow these points and produce a smoothed, single-width string as a result. A related topic is regression analysis,[10][11] which focuses more on questions of statistical inference such as how much uncertainty is present in a curve that is fit to data observed with random errors.
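The claim above that, for a straight-line fit, the coefficient of determination equals the square of the correlation coefficient can be checked numerically. The following is a minimal sketch in plain Python; the data values and the helper names (linear_fit, r_squared, pearson_r) are made up for illustration and do not come from the original discussion.

```python
from statistics import mean

def linear_fit(xs, ys):
    """Ordinary least-squares line: returns (intercept, slope)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

def r_squared(ys, fitted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = mean(ys)
    ss_res = sum((y - f) ** 2 for y, f in zip(ys, fitted))
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

def pearson_r(xs, ys):
    """Pearson correlation coefficient between xs and ys."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

# Hypothetical, roughly linear data (illustration only).
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [1.2, 1.9, 3.2, 3.8, 5.1, 6.3]

intercept, slope = linear_fit(xs, ys)
fitted = [intercept + slope * x for x in xs]

r2_from_residuals = r_squared(ys, fitted)
r2_from_correlation = pearson_r(xs, ys) ** 2
```

The two quantities agree only for a degree-1 fit with an intercept. For higher-degree polynomials the residual-based definition still applies, while squaring the correlation between x and y does not.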

If you want to fit a model of higher degree, you can construct polynomial features out of the linear feature data and fit the model to those too. Regression allows a data scientist to model the relationship between an outcome variable and predictor variables. Most commonly, one fits a function of the form y = f(x).

When a straight line does not describe the data well, we can fit a polynomial regression model. By using the confint() function we can obtain the confidence intervals of the parameters of our model. Plot the results.

What about R squared for a non-linear least squares function? You are comparing 3 methods with fitting a slope and regression with 3 methods without fitting a slope.

Although I am a little offended by an "RTFM" (but maybe that's just me): the problem is that in all I've read, at least with regard to doing linear regression in R, people sometimes do this, others do that.

# Coefficients:

This means that adding the polynomial term helped the second regression model give a substantially better fit to the data than the first. To avoid overfitting, it's important to test that each polynomial component in a regression model makes a meaningful difference to the model fit. The terms in your model need to be reasonably chosen.

As you can see based on the previous output of the RStudio console, we have fitted a regression model with a fourth-order polynomial. The coefficients of this polynomial regression model are different compared to Examples 1 and 2, because this time we used orthogonal polynomials.

Curve fitting is the process of constructing a curve, or mathematical function (such as a polynomial equation), that has the best fit to a series of data points, possibly subject to constraints. For linear-algebraic analysis of data, "fitting" usually means trying to find the curve that minimizes the vertical (y-axis) displacement of a point from the curve (e.g., ordinary least squares). Geometric fits are not popular because they usually require non-linear and/or iterative calculations, although they have the advantage of a more aesthetic and geometrically accurate result.[18][19][20] Even if an exact match exists, it does not necessarily follow that it can be readily discovered. Many other combinations of constraints are possible for these and for higher-order polynomial equations. S-shaped data can be fitted using the logistic function.

A straight-line fit for a curve with one independent variable and one dependent variable has a unique solution (the local minimum is the global minimum). Other model forms include a higher-order polynomial form and an exponential form.

Numpy is not afraid to call it "corrcoef", which presupposes Pearson is the de-facto correlation coefficient. The %timeit magic command requires IPython.


It's easy to specify a polynomial regression model in R. It's the same as linear regression, but we use a function such as poly() or I() to state the polynomial term. This will lead to more accurate predictions of new values in test data. A model with a quadratic term has the form $Y = \beta_{0} + \beta_{1} X + \beta_{2} X^{2} + u$.

Next, let's fit several polynomial regression models to the data. To test whether the quadratic polynomial component improves our model fit, we can fit a simpler linear model with lmBF. When more advanced terms no longer significantly improve the model fit, we have our final model specification.

The return of head(cbind(x, x^2)) is as expected. The coefficients from orthogonal polynomials are not wrong, really, they just have to be interpreted differently. I'll explain in the next example. To use orthogonal polynomials, we simply have to remove the raw argument from our R syntax (the default specifications of the poly function set the raw argument to be equal to FALSE):

lm(y ~ poly(x, 4)) # Use orthogonal polynomials
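To make the difference between raw and orthogonal polynomial terms concrete, here is a small sketch in plain Python. It builds orthogonal columns by Gram-Schmidt on 1, x, x^2, which is in the spirit of what R's poly() does by default but is not claimed to reproduce its exact output (signs and scaling may differ). The function names and the data are assumptions made for illustration.

```python
def dot(u, v):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def pearson(u, v):
    """Pearson correlation between two columns."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    du = [a - mu for a in u]
    dv = [b - mv for b in v]
    return dot(du, dv) / (dot(du, du) * dot(dv, dv)) ** 0.5

def orthogonal_columns(xs, degree):
    """Gram-Schmidt on 1, x, ..., x^degree; returns the unit-length
    non-constant columns, each orthogonal to the constant column."""
    basis = [[1.0] * len(xs)]
    for power in range(1, degree + 1):
        col = [float(x) ** power for x in xs]
        for b in basis:
            scale = dot(col, b) / dot(b, b)
            col = [c - scale * bi for c, bi in zip(col, b)]
        basis.append(col)
    return [[c / dot(col, col) ** 0.5 for c in col] for col in basis[1:]]

xs = list(range(1, 11))                        # hypothetical predictor values
raw_corr = pearson(xs, [x ** 2 for x in xs])   # raw x and x^2 are highly correlated
lin, quad = orthogonal_columns(xs, 2)
orth_corr = pearson(lin, quad)                 # orthogonal columns are uncorrelated
```

Both sets of columns span the same space, so the fitted values of a regression are the same either way; only the coefficients and their interpretation change.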

However, if we use the function "fit" with LAR on the command line as well as the "curve fitting tool" app, the coefficients are the same but the goodness of fit is different. There is the option to see the equation of the line as well as an r-squared value for each type.

Least-squares fitting involves minimizing the sum of the squared residuals in the model by adjusting the values of the intercept and coefficients. A least-squares fit can also be expressed in matrix form. Correlation between predictor variables can be a problem in linear models, so it's probably better (in general) to use poly() instead of I(). So how do we express the result of 'poly' as an expression? When curve fitting with Python's statsmodels OLS linear regression, how do I choose the constant in the formula? Are you doing model selection (choosing what degree to use)?

One reparameterization involves rewriting $Y = \beta_{0} + \beta_{1} X + \beta_{2} X^{2} + u$ as $Y = m + \beta_{2} \left( f - X \right)^{2} + u$, where the $\beta$ terms are the coefficients and $u$ is an error term. Evaluating a fitted quadratic at $X = 3$ gives, for example:

$$\text{bar} = 3.268 - 0.122 \cdot 3 + 1.575 \cdot 3^2 = 17.077$$

In the MATLAB example, p = polyfit(x,y,7); fits the polynomial. Evaluate the polynomial on a finer grid and plot the results.

A first-degree polynomial (a line) constrained by only a single point, instead of the usual two, would give an infinite number of solutions. Uncertainties can be present on both x and y. Tides follow sinusoidal patterns, hence tidal data points should be matched to a sine wave, or the sum of two sine waves of different periods, if the effects of the Moon and Sun are both considered. In engineering practice, the swirl curve is obtained by the method of point selection and lofting.

comb_fit_tables: Combine all fitting data points into a single data frame.
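The rewriting of the quadratic into the form with $m$ and $f$ follows from completing the square, which gives $f = -\beta_{1} / (2 \beta_{2})$ and $m = \beta_{0} - \beta_{1}^{2} / (4 \beta_{2})$. The sketch below checks this with the coefficients from the worked arithmetic above (3.268, -0.122, 1.575); the function names are hypothetical and chosen only for this illustration.

```python
def quadratic(b0, b1, b2, x):
    """Evaluate b0 + b1*x + b2*x^2."""
    return b0 + b1 * x + b2 * x ** 2

def to_vertex_form(b0, b1, b2):
    """Rewrite b0 + b1*x + b2*x^2 as m + b2*(f - x)^2; returns (m, f)."""
    f = -b1 / (2 * b2)             # location of the turning point
    m = b0 - b1 ** 2 / (4 * b2)    # value of the curve at the turning point
    return m, f

# Coefficients taken from the worked example in the text.
b0, b1, b2 = 3.268, -0.122, 1.575

value_at_3 = quadratic(b0, b1, b2, 3)
m, f = to_vertex_form(b0, b1, b2)
vertex_value_at_3 = m + b2 * (f - 3) ** 2
```

Both forms describe the same curve, so they return the same prediction at any X. The second form is convenient when the turning point itself is the quantity of interest.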
A linear relationship between two variables x and y is one of the most common, effective and easy assumptions to make when trying to figure out their relationship. The r-squared value is also different for each type. R^2, officially conflated as r^2, is probably the one you want, as it's a least-square fit, which is better than the simple fraction of sums that r is. I'm not terribly familiar with numpy (I usually work in R), so there is probably a tidier way to calculate your R-squared.

Extrapolation refers to the use of a fitted curve beyond the range of the observed data,[16] and is subject to a degree of uncertainty[17] since it may reflect the method used to construct the curve as much as it reflects the observed data. Over-fitting happens when your model is picking up the noise instead of the signal: even though your model is getting better and better at fitting the existing data, this can be bad when you are trying to predict new data and lead to misleading results. The reduced chi-square statistic shows you when the fit is good.

It doesn't occur to me why one would use this for regression if the coefficients you get are "wrong".

Bayes factors above three are often interpreted as being sufficient evidence in a model's favor. In this example, we fit a model with a quadratic component, a second-degree polynomial. To get around this, we can create a new column in our data that contains a polynomial term and then insert that as a coefficient in the model.
For example, if we want to fit a polynomial of degree 2, we can directly do it by solving a system of linear equations. You are interested in R^2, which you can calculate in a couple of ways. The blue figure was made by a sigmoid regression of data measured in farm lands.

This Bayes factor doesn't tell us how useful each individual predictor is at improving the model fit, however. To justify adding polynomial components to a regression model, it's important to test whether each one significantly improves the model fit. Luckily, polynomial regression allows for the accurate modeling of non-linear relationships. Again, overfitting can lead polynomial regression models to make inaccurate predictions.

When the errors are described by a covariance matrix sigma, the optimized function is chisq = r.T @ inv(sigma) @ r. New in version 0.19.

# (Intercept) poly(x, 4)1 poly(x, 4)2 poly(x, 4)3 poly(x, 4)4
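The degree-2 fit by solving a system of linear equations, mentioned above, can be sketched as follows. This is a plain-Python illustration using the normal equations and Gaussian elimination, not the method of any particular library; the data are invented, and for high degrees or widely spread x values the normal equations become ill-conditioned, so a library routine would be the safer choice.

```python
def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination
    with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]   # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):                   # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def polyfit_normal(xs, ys, degree):
    """Least-squares coefficients [c0, c1, ...] from the normal equations."""
    n = degree + 1
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    return solve(ata, aty)

def r_squared(ys, fitted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = sum(ys) / len(ys)
    ss_res = sum((y - f) ** 2 for y, f in zip(ys, fitted))
    ss_tot = sum((y - y_bar) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

# Hypothetical data: roughly y = 1 + 2x + 3x^2 with small perturbations.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 5.9, 17.2, 33.8, 57.1]

coeffs = polyfit_normal(xs, ys, 2)
fitted = [sum(c * x ** k for k, c in enumerate(coeffs)) for x in xs]
r2 = r_squared(ys, fitted)
```

The residual-based R^2 used here works for any polynomial degree, which is why it is a reasonable default when the fit is not a straight line.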

Depending on the order of your polynomial regression model, it might be inefficient to program each polynomial manually (as shown in Example 1).

Its easy to specify a polynomial regression model in R. Its the same as linear regression, but we use the. This will lead to more accurate predictions of new values in test data. To test whether the quadratic polynomial component improves our model fit, we can fit a simpler linear model with lmBF. Fit Several Curves Next, lets fit several polynomial regression models to the Their coefficients are not wrong, really, they just have to be interpreted differently. When more advanced terms no longer significantly improve the model fit, we have our final model specification. Ill explain in the next example. The return of head(cbind(x,x^2)) looks like: This is as expected. Y = 0 + 1 X + 2 X 2 + u. as. For this, we simply have to remove the raw argument from our R syntax (the default specifications of the poly function set the raw argument to be equal to FALSE): lm(y ~ poly(x, 4)) # Use orthogonal polynomials

In the regression equation, each $\beta$ is a coefficient and $u$ is an error term. Fitting the model involves minimizing the sum of the squared residuals by adjusting the values of the intercept and coefficients. We can also obtain the matrix for a least squares fit by writing the same equations in matrix form.

Correlation between predictor variables can be a problem in linear models, so it's probably better (in general) to use poly() instead of I(). So how do we express the result of 'poly' as an expression?

A quadratic model can also be written in terms of its turning point. It involves rewriting $Y = \beta_{0} + \beta_{1} X + \beta_{2} X^{2} + u$ as $Y = m + \beta_{2} \left( f - X \right)^{2} + u$, where $f = -\beta_{1} / (2 \beta_{2})$ is the location of the turning point and $m = \beta_{0} - \beta_{1}^{2} / (4 \beta_{2})$ is the value of the curve at that point. Evaluating a fitted quadratic is plain arithmetic; at $X = 3$, for example, $\text{bar} = 3.268 - 0.122 \cdot 3 + 1.575 \cdot 3^2 = 17.077$.

In MATLAB, p = polyfit (x,y,7); fits the 7th-degree polynomial; evaluate the polynomial on a finer grid and plot the results. If we use the function "fit" with LAR at the command line and compare it with the "curve fitting tool" app, the coefficients are the same but the goodness of fit is different. There is also the option to see the equation of the line as well as an r-squared value for each type of fit.

The form of the curve should match the problem. A first degree polynomial (a line) constrained by only a single point, instead of the usual two, would give an infinite number of solutions. Tides follow sinusoidal patterns, hence tidal data points should be matched to a sine wave, or the sum of two sine waves of different periods, if the effects of the Moon and Sun are both considered. In engineering practice, the swirl curve is obtained by the method of point selection and lofting.
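
The turning-point rewriting of the quadratic can be checked numerically. The sketch below uses plain Python; the helper name vertex_form is hypothetical, and the coefficients are the ones from the worked value 17.077 in the text. It converts $(\beta_0, \beta_1, \beta_2)$ into $(m, f)$ and compares the two forms at a few points.

```python
def vertex_form(b0, b1, b2):
    """Return (m, f) such that b0 + b1*x + b2*x**2 == m + b2*(f - x)**2."""
    if b2 == 0:
        raise ValueError("b2 must be non-zero for a quadratic")
    f = -b1 / (2.0 * b2)          # location of the turning point
    m = b0 - b1**2 / (4.0 * b2)   # value of the curve at the turning point
    return m, f


# Coefficients taken from the worked example in the text.
b0, b1, b2 = 3.268, -0.122, 1.575
m, f = vertex_form(b0, b1, b2)


def standard(x):
    """Quadratic in the usual intercept/slope/curvature form."""
    return b0 + b1 * x + b2 * x**2


def vertex(x):
    """The same quadratic written around its turning point."""
    return m + b2 * (f - x) ** 2


for x in (-2.0, 0.0, 3.0, 10.0):
    print(x, standard(x), vertex(x))
```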
A linear relationship between two variables x and y is one of the most common, effective and easy assumptions to make when trying to figure out their relationship. Extrapolation refers to the use of a fitted curve beyond the range of the observed data,[16] and is subject to a degree of uncertainty[17] since it may reflect the method used to construct the curve as much as it reflects the observed data.

Over-fitting happens when your model is picking up the noise instead of the signal: even though your model is getting better and better at fitting the existing data, this can be bad when you are trying to predict new data and lead to misleading results.

The r-squared value is also different for each type of fit. $R^2$, often conflated with $r^2$, is probably the one you want, since it comes from the least-squares fit rather than from the simple ratio of sums that defines $r$. In numpy, R-squared can be computed from the residual and total sums of squares; there is probably a tidier way, but that approach should be correct. The reduced chi-square statistic shows you when the fit is good.

It may not be obvious why one would use orthogonal polynomials for regression if the coefficients you get look "wrong".

Bayes factors above three are often interpreted as being sufficient evidence in a model's favor. In this example, we fit a model with a quadratic component, that is, a second-degree polynomial. To get the polynomial term into the model, we can create a new column in our data that contains the term and then include that column as a predictor.
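
As a rough illustration of the R-squared calculation described above, the following Python sketch (assuming NumPy; the data are synthetic and the helper name r_squared is hypothetical) computes $R^2 = 1 - SS_{res} / SS_{tot}$ for polynomial fits of several degrees. Because it is measured on the same data used for fitting, this $R^2$ cannot go down as the degree increases, which is one reason it cannot detect over-fitting by itself.

```python
import numpy as np


def r_squared(x, y, degree):
    """R^2 of a least-squares polynomial fit, measured on the fitting data."""
    coeffs = np.polyfit(x, y, degree)
    fitted = np.polyval(coeffs, x)
    ss_res = np.sum((y - fitted) ** 2)       # residual sum of squares
    ss_tot = np.sum((y - np.mean(y)) ** 2)   # total sum of squares about the mean
    return 1.0 - ss_res / ss_tot


rng = np.random.default_rng(1)  # fixed seed so the sketch is reproducible
x = np.linspace(0.0, 5.0, 30)
# Synthetic (made-up) quadratic trend plus noise.
y = 2.0 + 1.5 * x - 0.4 * x**2 + rng.normal(scale=0.5, size=x.size)

scores = {d: r_squared(x, y, d) for d in (1, 2, 3, 6)}
for d, r2 in scores.items():
    print(f"degree {d}: R^2 = {r2:.4f}")

# For a straight line, R^2 equals the squared Pearson correlation r^2.
pearson_r = np.corrcoef(x, y)[0, 1]
print("r^2 from corrcoef:", pearson_r**2)
```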
For example, if we want to fit a polynomial of degree 2, we can do it directly by solving a system of linear equations.

Luckily, polynomial regression allows for the accurate modeling of non-linear relationships. Over-fitting, again, can lead polynomial regression models to make inaccurate predictions. To justify adding polynomial components to a regression model, it's important to test whether each one significantly improves the model fit. A Bayes factor for the whole model doesn't tell us how useful each individual predictor is at improving the model fit, however.

You are interested in $R^2$, which you can calculate in a couple of ways. In SciPy's curve_fit, when sigma is given as a 2-D covariance matrix of the errors, the optimized function is chisq = r.T @ inv (sigma) @ r (new in version 0.19).

After calculating the fit with R, one might assume that the formula for the fit can be read straight off the reported coefficients. With orthogonal polynomials that is not the case, because the coefficients of lm(y ~ poly(x, 4)) belong to the orthogonal terms named in the header of the output:

# (Intercept) poly(x, 4)1 poly(x, 4)2 poly(x, 4)3 poly(x, 4)4
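
The direct approach mentioned above, fitting a degree-2 polynomial by solving a system of linear equations, can be sketched in Python as follows. This assumes NumPy and uses made-up data points; it builds the normal equations $(A^{T} A) \beta = A^{T} y$ for the columns $1, x, x^{2}$ and cross-checks the result against numpy.polyfit.

```python
import numpy as np

# Made-up data points that roughly follow a parabola.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([1.1, 1.9, 5.2, 9.8, 17.1, 26.0])

# Design matrix with columns 1, x, x^2.
A = np.column_stack([np.ones_like(x), x, x**2])

# Normal equations: (A^T A) beta = A^T y, a 3x3 linear system.
beta = np.linalg.solve(A.T @ A, A.T @ y)

# Cross-check with polyfit, which returns the highest power first.
beta_polyfit = np.polyfit(x, y, 2)[::-1]

print("beta from normal equations:", beta)
print("beta from polyfit:         ", beta_polyfit)
print("agree:", np.allclose(beta, beta_polyfit))
```

Solving the normal equations is fine for low degrees like this one; for higher degrees the system becomes poorly conditioned, which is one motivation for orthogonal polynomial bases.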

Depending on the order of your polynomial regression model, it might be inefficient to program each polynomial manually (as shown in Example 1).
